TrueNAS 25.04 CE was used for this guide.

The TrueNAS philosophy is “1 device - 1 assignment”, so by doing this we are going off-piste and who knows what could happen. Please proceed only if you have a backup of your data and you know what you are doing.

Identify the SSD with the lsblk command.

For instance:

NAME MAJ:MIN RM SIZE RO TYPE MOUNTPOINTS

sda 8:0 0 10.9T 0 disk

└─sda1 8:1 0 10.9T 0 part

sdb 8:16 0 3.6T 0 disk

└─sdb1 8:17 0 3.6T 0 part

sdc 8:32 0 3.6T 0 disk

└─sdc1 8:33 0 3.6T 0 part

sdd 8:48 0 3.6T 0 disk

└─sdd1 8:49 0 3.6T 0 part

nvme1n1 259:0 0 119.2G 0 disk

├─nvme1n1p1 259:1 0 1M 0 part

├─nvme1n1p2 259:2 0 512M 0 part

└─nvme1n1p3 259:3 0 118.7G 0 part

nvme0n1 259:4 0 953.9G 0 disk

└─nvme0n1p1 259:5 0 951.9G 0 part

nvme2n1 259:6 0 238.5G 0 disk
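
Picking the right disk by eye is error-prone, so here is a minimal sketch that lists only the disks without any partitions. The helper name `unpartitioned_disks` is made up for this guide, and it assumes the input comes from `lsblk -rn -o NAME,TYPE,PKNAME` (raw rows of name, type and parent device). A disk without partitions is not necessarily unused, so treat the result as a hint only.

```shell
#!/bin/sh
# Hypothetical helper (not part of TrueNAS): reads "NAME TYPE PKNAME" rows
# on stdin and prints every disk that has no partitions on it.
unpartitioned_disks() {
    awk '
        $2 == "disk" { disks[++n] = $1 }      # remember disks in input order
        $2 == "part" { has_part[$3] = 1 }     # $3 is the parent disk of a partition
        END {
            for (i = 1; i <= n; i++)
                if (!(disks[i] in has_part)) print disks[i]
        }
    '
}

# Intended use on a live system (left commented out on purpose):
#   lsblk -rn -o NAME,TYPE,PKNAME | unpartitioned_disks
```

With the listing above, this would report only nvme2n1, which is the disk used in the rest of the guide.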

We’ll use nvme2n1, the only disk without partitions, as the example and partition it with the parted command:

parted /dev/nvme2n1

Inside parted (note that mklabel gpt replaces any existing partition table on the disk):

(parted) mklabel gpt

(parted) mkpart primary 0% 50% # First partition: 0% to 50%

(parted) mkpart primary 50% 100% # Second partition: 50% to 100%

(parted) print

(parted) quit
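
The same split can also be done without the interactive prompt, using parted's `--script` option. The sketch below only builds and prints the command line so it can be reviewed before running it by hand. The function name `split_cmd` and its percentage argument are assumptions made for this example.

```shell
#!/bin/sh
# Hypothetical helper: prints a non-interactive parted command that creates
# a GPT label and two partitions split at PERCENT (default 50).
# Usage: split_cmd DEVICE [PERCENT]
split_cmd() {
    dev=$1
    pct=${2:-50}
    # %% is a literal percent sign in a printf format string.
    printf 'parted --script %s mklabel gpt mkpart primary 0%% %s%% mkpart primary %s%% 100%%\n' \
        "$dev" "$pct" "$pct"
}

# Print the command for review; nothing is written to the disk here.
split_cmd /dev/nvme2n1 50
```

Printing instead of executing is deliberate: a wrong device name here destroys a partition table, so it is worth reading the line once more before pasting it into a root shell.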

Check the result

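Run lsblk again. The example output below comes from a disk that was split 75%/25%, so the two partitions differ in size; with the 50%/50% split shown above, each partition would be about 119G.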

NAME MAJ:MIN RM SIZE RO TYPE MOUNTPOINTS

sda 8:0 0 10.9T 0 disk

└─sda1 8:1 0 10.9T 0 part

sdb 8:16 0 3.6T 0 disk

└─sdb1 8:17 0 3.6T 0 part

sdc 8:32 0 3.6T 0 disk

└─sdc1 8:33 0 3.6T 0 part

sdd 8:48 0 3.6T 0 disk

└─sdd1 8:49 0 3.6T 0 part

nvme1n1 259:0 0 119.2G 0 disk

├─nvme1n1p1 259:1 0 1M 0 part

├─nvme1n1p2 259:2 0 512M 0 part

└─nvme1n1p3 259:3 0 118.7G 0 part

nvme0n1 259:4 0 953.9G 0 disk

└─nvme0n1p1 259:5 0 951.9G 0 part

nvme2n1 259:6 0 238.5G 0 disk

├─nvme2n1p1 259:9 0 178.9G 0 part

└─nvme2n1p2 259:10 0 59.6G 0 part
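
To double-check that the split came out as intended, the share of each partition can be computed from the sizes in bytes. This is a rough sketch: the helper name `partition_shares` is made up, and it assumes its input comes from `lsblk -brn -o NAME,TYPE,SIZE,PKNAME`.

```shell
#!/bin/sh
# Hypothetical helper: reads "NAME TYPE SIZE PKNAME" rows (sizes in bytes)
# on stdin and prints each partition of DISK with its share of the disk.
# Usage: partition_shares DISK
partition_shares() {
    awk -v disk="$1" '
        $2 == "disk" && $1 == disk { total = $3 }
        $2 == "part" && $4 == disk { name[++n] = $1; size[n] = $3 }
        END {
            if (total <= 0) exit 1            # disk not found in the input
            for (i = 1; i <= n; i++)
                printf "%s %.0f%%\n", name[i], 100 * size[i] / total
        }
    '
}

# Intended use on a live system (left commented out on purpose):
#   lsblk -brn -o NAME,TYPE,SIZE,PKNAME | partition_shares nvme2n1
```

The percentages are rounded, because partition alignment and the GPT headers take a little space away from the exact split.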

Add partitions to the pool

Get the partitions' PARTUUIDs

To get the PARTUUIDs of the newly created partitions, use this command (replace /dev/nvme2* with your device):

blkid /dev/nvme2* | awk -F: '{print $1}' | while read dev; do echo "$dev $(blkid -s PARTUUID -o value $dev)"; done

You should get back something like this:

/dev/nvme2n1

/dev/nvme2n1p1 7d6ee620-6ea8-48d9-b16a-de569386d790

/dev/nvme2n1p2 18f5cb53-635d-4cad-a8cf-00423ac5ff6f
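
On Linux, udev also exposes the same mapping as symlinks under /dev/disk/by-partuuid, so a single partition can be looked up without parsing blkid output. A minimal sketch, assuming that directory exists on your system; the function name `partuuid_of` and its optional second argument are made up for this example.

```shell
#!/bin/sh
# Hypothetical helper: prints the PARTUUID of a partition by finding the
# symlink in the by-partuuid directory that points at it.
# Usage: partuuid_of PARTITION [DIRECTORY]
partuuid_of() {
    part=$(readlink -f "$1") || return 1
    dir=${2:-/dev/disk/by-partuuid}
    for link in "$dir"/*; do
        [ -L "$link" ] || continue            # skip anything that is not a symlink
        if [ "$(readlink -f "$link")" = "$part" ]; then
            basename "$link"                  # the link name is the PARTUUID
            return 0
        fi
    done
    return 1                                  # no matching symlink found
}

# Intended use on a live system (left commented out on purpose):
#   partuuid_of /dev/nvme2n1p2
```

For a quick overview of a whole disk, `lsblk -o NAME,SIZE,PARTUUID /dev/nvme2n1` should show the same values in one table.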

We are interested in the two PARTUUID values:

18f5cb53-635d-4cad-a8cf-00423ac5ff6f and 7d6ee620-6ea8-48d9-b16a-de569386d790

We will use these values, rather than the device names, to add the partitions to the pools.

Add partitions to the pools as cache

The command below adds partition /dev/nvme2n1p2 to the pool pool1 as cache (L2ARC).

General recommendation, often overlooked: ensure your SSDs are configured with their native block size. Some SSDs, especially Samsung models, report 512-byte sectors even though that is not their real sector size. When you add such a device to a pool, override the sector size with the ashift parameter, which is the base-2 logarithm of the sector size (ashift=12 means 2^12 = 4096 bytes). For example, to add most SSDs as an L2ARC device, force a 4096-byte sector size with this:

zpool add -o ashift=12 pool1 cache 18f5cb53-635d-4cad-a8cf-00423ac5ff6f
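
To make the ashift arithmetic concrete, and to avoid pasting a device name where a PARTUUID is expected, here is a small sketch. Both function names (`ashift_for`, `cache_add_cmd`) are made up for this guide; the second one only prints the zpool command so it can be reviewed before it is run.

```shell
#!/bin/sh
# Hypothetical helper: prints the ashift value (log2) for a sector size
# that is a power of two, e.g. 4096 -> 12.
ashift_for() {
    size=$1
    shift_val=0
    while [ "$size" -gt 1 ]; do
        size=$((size / 2))
        shift_val=$((shift_val + 1))
    done
    echo "$shift_val"
}

# Hypothetical helper: prints the zpool command that adds a partition as
# cache. Usage: cache_add_cmd POOL PARTUUID [SECTOR_SIZE]
cache_add_cmd() {
    pool=$1
    uuid=$2
    sector=${3:-4096}
    # Refuse anything that does not look like a GPT PARTUUID (8-4-4-4-12 hex).
    printf '%s\n' "$uuid" |
        grep -Eq '^[0-9a-f]{8}(-[0-9a-f]{4}){3}-[0-9a-f]{12}$' || return 1
    echo "zpool add -o ashift=$(ashift_for "$sector") $pool cache $uuid"
}

# Prints the same command as shown above; nothing is added to the pool here.
cache_add_cmd pool1 18f5cb53-635d-4cad-a8cf-00423ac5ff6f
```

If you want an extra safety net, zpool add also accepts -n, which displays the resulting configuration without actually adding the device.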

The command for adding a partition to a TrueNAS pool is taken from: “Notes on storage node performance optimization on ZFS”, Node Operators, Storj Community Forum (official).

Confirm result

Use this command (it is slightly elaborate, to hide irrelevant columns):

zpool list -v -o name,size,alloc,free pool1 | awk '{printf "%-42s %6s %6s %6s\n", $1, $2, $3, $4}'

The regular command works as well: zpool list -v pool1

Example output (last columns stripped for better formatting):

NAME SIZE ALLOC FREE

pool1 10.9T 4.06T 6.85T

raidz1-0 10.9T 4.06T 6.85T

3c04f950-aadc-4579-8d46-a54d0381de8a 3.64T - -

6975fa15-bcec-4976-841d-33a15dc2d4ea 3.64T - -

e6bb2f94-4307-4bfd-bf9e-506d465f9c2f 3.64T - -

cache - - -

7d6ee620-6ea8-48d9-b16a-de569386d790 128G 6.32M 128G

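As we can see, our partition is acting as cache. Note that this example output lists 7d6ee620-6ea8-48d9-b16a-de569386d790, the PARTUUID of /dev/nvme2n1p1; on your system the cache entry should show whichever PARTUUID you passed to zpool add. The same procedure can be repeated to add the other partition on the same SSD to another pool.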
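
The visual check can also be scripted. The sketch below reads `zpool list -v` output on stdin and succeeds only if the given PARTUUID appears under the cache section. The function name `is_cache_device` is made up, and the list of other section names (logs, spare, spares, special, dedup) is an assumption about how zpool labels them.

```shell
#!/bin/sh
# Hypothetical helper: exits 0 if PARTUUID is listed in the "cache" section
# of `zpool list -v` output read from stdin, 1 otherwise.
# Usage: zpool list -v pool1 | is_cache_device PARTUUID
is_cache_device() {
    awk -v uuid="$1" '
        $1 == "cache" { in_cache = 1; next }
        # Any other section header ends the cache section (assumed names).
        $1 == "logs" || $1 == "spare" || $1 == "spares" ||
        $1 == "special" || $1 == "dedup" { in_cache = 0 }
        in_cache && $1 == uuid { found = 1 }
        END { exit !found }
    '
}

# Intended use on a live system (left commented out on purpose):
#   zpool list -v pool1 | is_cache_device 18f5cb53-635d-4cad-a8cf-00423ac5ff6f && echo "cache OK"
```

Matching only inside the cache section matters here, because the data disks of the pool are listed by PARTUUID in the same output.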

GUI

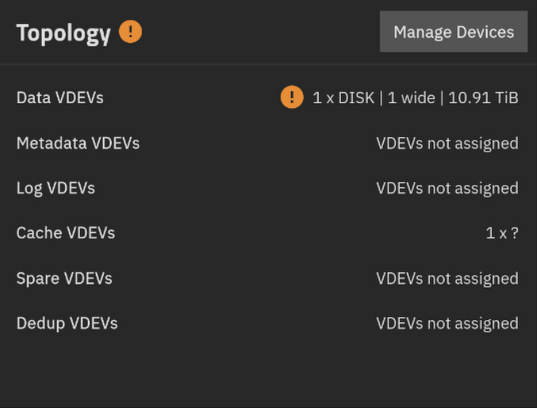

In the GUI the result will look nonsensical, as this setup is not officially supported by TrueNAS:

One pool would show something like this:

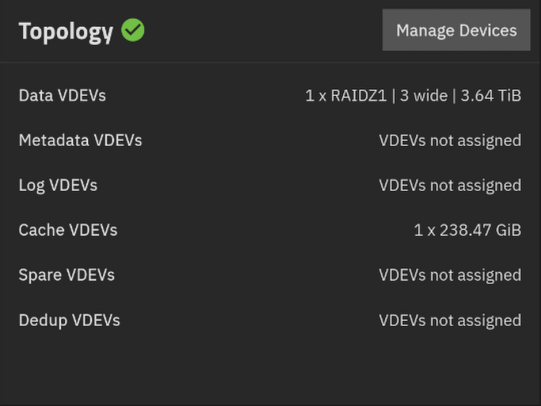

And another one:
